AVS3 Video Codec Technology

AVS3: Tailor-Made for 8K Applications

Videos Are the Dominant Content Medium in the AI Era

Videos are an important source and carrier of data in the AI era. Video applications have become ubiquitous with the growth of the mobile Internet. Live video streaming, video on demand (VOD), short videos, and video chatting have revolutionized daily life. In the future, over 80% of public network traffic and over 70% of industry application data will come from videos.

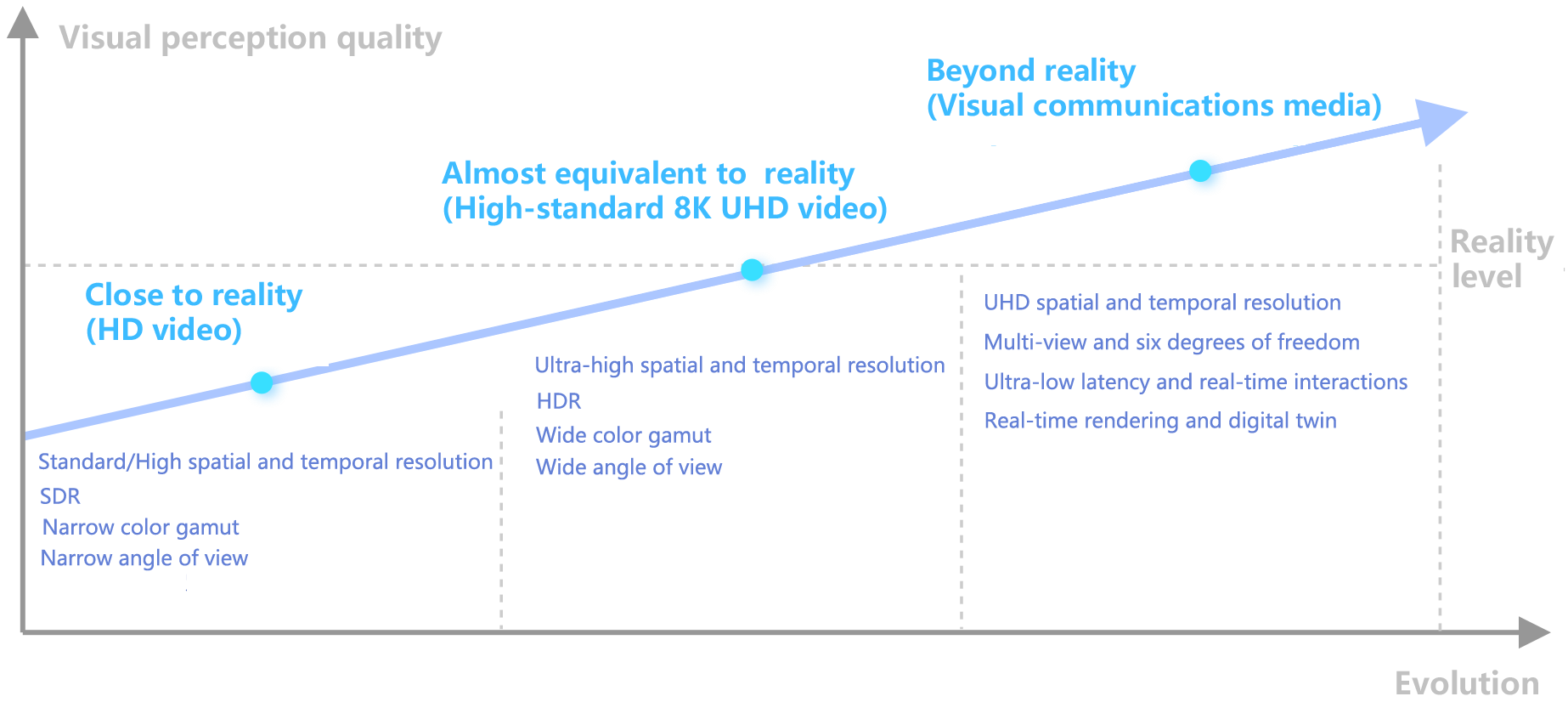

Ultra-high definition (UHD) builds on standard definition (SD) and high definition (HD) technologies, and serves as the foundation for a myriad of emerging industries, including new media, VR, light field, and naked-eye holography. UHD also supports a range of AI applications, including smart healthcare and unmanned driving. These new applications and business models give users a better experience, doubling resolution, frame rate, color gamut, and angle of view (AOV). However, the explosive growth of video data poses challenges for data transmission and storage.

Data Storage and Transmission Are a Thorn in the Side

Massive data

Time-consuming

Video Encoding and Decoding Are a Key Part of the Solution

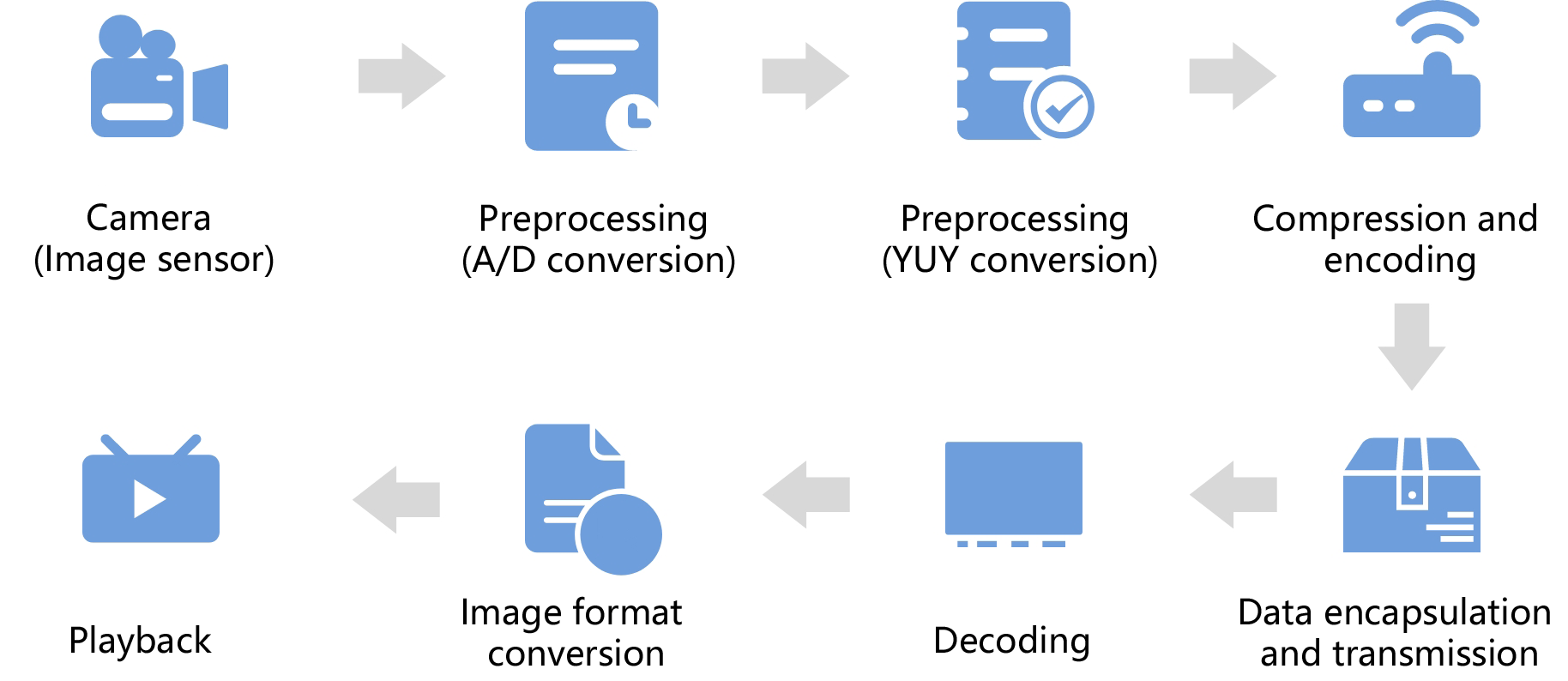

If you are interested in enhancing video quality, or minimizing bandwidth usage without compromising quality, take a look at the principles behind video encoding and decoding. After images are captured and preprocessed by a camera, redundant video data is removed via algorithms. Images are then compressed, stored, and transmitted. What comes next is video decoding and format conversion. This process makes full use of the available computing power, and optimizes video reconstruction quality and compression ratios, in order to meet bandwidth and storage capacity requirements.
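To give a sense of why compression is needed before storage and transmission, the following rough sketch computes the data rate of uncompressed video. The format (7680 x 4320, 120 frames per second, 10-bit samples, 4:2:0 chroma subsampling) and the 100 Mbit/s delivery budget are illustrative assumptions, not figures from this page.

```python
def raw_bitrate_bps(width, height, fps, bit_depth, samples_per_pixel):
    """Uncompressed data rate in bits per second."""
    return width * height * fps * bit_depth * samples_per_pixel

# Assumed format: 8K (7680x4320), 120 frames/s, 10-bit samples,
# 4:2:0 subsampling (1 luma + 2 * 1/4 chroma = 1.5 samples per pixel).
raw = raw_bitrate_bps(7680, 4320, 120, 10, 1.5)

channel_bps = 100e6  # hypothetical delivery budget, not from the source
ratio = raw / channel_bps

print(f"raw: {raw / 1e9:.1f} Gbit/s, required compression: {ratio:.0f}:1")
```

Under these assumptions the raw stream is several hundred times larger than the channel, which is the gap that redundancy removal in the encoder has to close.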

Commercial Use of 8K Videos Is Just Around the Corner

Ongoing improvements to communications capabilities have helped bolster UHD displays. 8K is becoming common in audiovisual applications such as sporting events, live broadcasts, and movies. High resolution and high frame rates bring a better viewing experience, but they also place heavier demands on data transmission. Video encoding and decoding technologies help balance the need for high-quality video reconstruction against efficient video compression, making it possible to implement 8K in commercial scenarios.

History of Encoding and Decoding Technologies

SD Era: SD Digital TVs and DVD Players

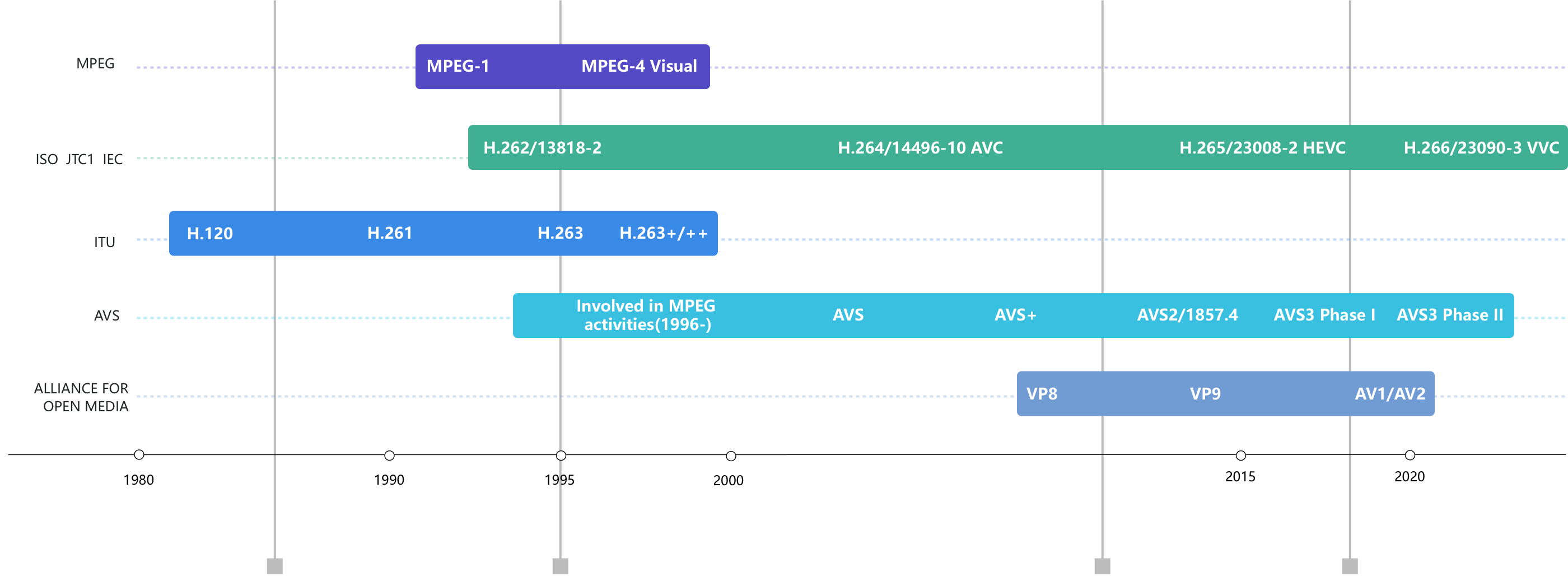

In the early 1990s, ISO/IEC formulated MPEG-1 for low-resolution video conferences and MPEG-2 for SD digital TVs and DVD players.

HD Era: Higher Standards for Satellite Channels

• In the late 1990s, MPEG-4 and H.263 were created for streaming media.

• ITU-T and ISO/IEC formulated H.264/MPEG AVC.

• The Audio Video coding Standard (AVS) Workgroup released the first-generation audio and video encoding and decoding standard AVS+ as the counterpart to H.264/MPEG AVC.

4K Era

• In 2013, ITU-T and ISO/IEC formulated HEVC/H.265.

• In 2016, the AVS Workgroup formulated the second-generation standard AVS2 for UHD audio and video encoding. AVS2 delivers nearly twice the performance of AVS1, with compression efficiency equivalent to that of H.265/HEVC. AVS2 also outperforms HEVC in full-frame encoding and in surveillance scenarios.

8K Era

• In 2017, ITU-T and ISO/IEC began formulating the next-generation video compression standard VVC.

• The AVS Workgroup released the third-generation standard AVS3 for 8K applications.

Unveiling AVS3

AVS is a series of audio and video encoding and decoding standards formulated by the Audio Video coding Standard (AVS) Workgroup. It comprises technical standards covering systems, video, audio, and digital copyright management, along with supporting compliance test standards. AVS3 is the third-generation standard in the series. It specifies the decoding process for efficient video compression methods that accommodate multiple bit rates, resolutions, and quality requirements. AVS3 has clear advantages in decoding efficiency, and is widely applied in TV broadcasting, digital movies, network TV, network video, video surveillance, real-time communications, instant messaging, digital storage media (DSM), and still images. It is also the first standard tailored to the 8K UHD industry, media applications, and the virtual reality (VR) industry.

Tailored to 8K Applications

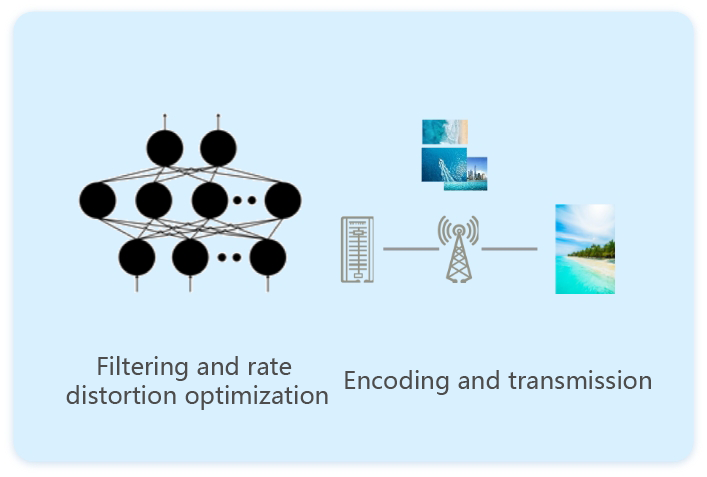

Towards Intelligent Encoding

AVS3 Deep Dive

Block Partitioning: Sharpens Image Prediction Accuracy

• Block partitioning divides a complex image into multiple rectangular blocks so that the image can be encoded and decoded block by block. In general, an object can be divided into multiple parts, and different parts are predicted using different partitioning techniques, including Quadtree plus Binary Tree (QTBT), Extended Quadtree (EQT), and Derived Tree (DT).

• The image can be flexibly divided into slices and coding tree units according to the specific scene. The asymmetric prediction units in DT mode effectively shorten the average prediction distance, which greatly improves prediction accuracy.
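The split shapes can be sketched as rectangle arithmetic. The snippet below is a minimal illustration, not the normative partitioning process: the function names are hypothetical, the EQT layout (two quarter-size outer strips with two half-size blocks between them) is an assumption about the standard, and DT is not covered.

```python
def quad_split(x, y, w, h):
    """Quadtree: four equal quadrants."""
    hw, hh = w // 2, h // 2
    return [(x, y, hw, hh), (x + hw, y, hw, hh),
            (x, y + hh, hw, hh), (x + hw, y + hh, hw, hh)]

def binary_split(x, y, w, h, horizontal):
    """Binary tree: two equal halves, stacked (horizontal) or side by side."""
    if horizontal:
        return [(x, y, w, h // 2), (x, y + h // 2, w, h // 2)]
    return [(x, y, w // 2, h), (x + w // 2, y, w // 2, h)]

def eqt_split(x, y, w, h, horizontal):
    """Extended quadtree (assumed layout): two outer strips of 1/4 size
    and two half-size blocks in the middle band."""
    if horizontal:
        q = h // 4
        return [(x, y, w, q),
                (x, y + q, w // 2, h // 2), (x + w // 2, y + q, w // 2, h // 2),
                (x, y + 3 * q, w, q)]
    q = w // 4
    return [(x, y, q, h),
            (x + q, y, w // 2, h // 2), (x + q, y + h // 2, w // 2, h // 2),
            (x + 3 * q, y, q, h)]

def area(blocks):
    """Total area covered by a list of (x, y, w, h) rectangles."""
    return sum(w * h for _, _, w, h in blocks)

cu = (0, 0, 64, 64)  # hypothetical 64x64 block
for name, parts in [("QT", quad_split(*cu)),
                    ("BT-H", binary_split(*cu, horizontal=True)),
                    ("EQT-H", eqt_split(*cu, horizontal=True))]:
    print(name, parts, "covers block:", area(parts) == 64 * 64)
```

Each split can be applied recursively to its sub-blocks, which is how an encoder adapts block sizes to the content of a scene.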

Intra-frame Prediction: Enhances Prediction Efficiency

Intra-frame prediction predicts pixels on the basis of adjacent encoded pixels in the same frame, by utilizing the correlation in the video spatial domain. This effectively removes video spatial redundancy.

IPF

The intra prediction filter (IPF) re-filters the pixels at the boundaries of predicted blocks. It aims to optimize the boundaries of intra-frame blocks, and increase the spatial correlation between pixels, for improved prediction accuracy.

TSCPM

The two-step cross-component prediction (TSCPM) leverages the strong correlation between luminance and chrominance to remove linear redundancy between components.

Step 1: Perform linear scaling on a luma block to obtain the value of a corresponding chroma prediction block Pc′ (x,y). The coefficient α is related to four sample points adjacent to the luma block.

Step 2: Perform downsampling on the chroma prediction block to obtain a final value Pc.
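The two steps can be sketched as follows. This is a simplified illustration under stated assumptions: the offset β, the rule used to derive α and β from the four neighbouring samples, and the plain 2 x 2 averaging filter for 4:2:0 downsampling are not taken from this page, and the sample values are hypothetical.

```python
def linear_params(neighbors):
    """Fit chroma ~= alpha * luma + beta from four (luma, chroma) neighbour pairs.

    Assumed rule: average the two smallest-luma and the two largest-luma
    pairs, then draw a line through the two averaged points.
    """
    pts = sorted(neighbors)
    lo_l = (pts[0][0] + pts[1][0]) / 2
    lo_c = (pts[0][1] + pts[1][1]) / 2
    hi_l = (pts[2][0] + pts[3][0]) / 2
    hi_c = (pts[2][1] + pts[3][1]) / 2
    alpha = 0.0 if hi_l == lo_l else (hi_c - lo_c) / (hi_l - lo_l)
    beta = lo_c - alpha * lo_l
    return alpha, beta

def tscpm_predict(luma, alpha, beta):
    # Step 1: linear scaling at luma resolution -> temporary block Pc'(x, y).
    temp = [[alpha * v + beta for v in row] for row in luma]
    # Step 2: downsample to chroma resolution (plain 2x2 average, 4:2:0 assumed).
    return [[(temp[y][x] + temp[y][x + 1] + temp[y + 1][x] + temp[y + 1][x + 1]) / 4
             for x in range(0, len(temp[0]), 2)]
            for y in range(0, len(temp), 2)]

neighbors = [(100, 60), (120, 70), (140, 80), (160, 90)]  # hypothetical samples
alpha, beta = linear_params(neighbors)
luma = [[100, 110, 120, 130],
        [100, 110, 120, 130],
        [140, 150, 160, 170],
        [140, 150, 160, 170]]
pred = tscpm_predict(luma, alpha, beta)
print(alpha, beta, pred)
```

Because the chroma block is predicted from reconstructed luma, only the remaining prediction error needs to be coded for the chroma component.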

Inter-frame Prediction: Increases Accuracy of Motion Vector Predictions

Inter-frame prediction is used to predict pixels on the basis of the encoded pixels in an adjacent image, by utilizing the correlation in the video time domain. This effectively removes video temporal redundancy.

AMVR

Adaptive motion vector resolution (AMVR) adaptively selects a motion vector resolution of 1/4 pixel, 1/2 pixel, 1 pixel, 2 pixels, or 4 pixels at the encoding end, according to the image attributes. Compared with a fixed resolution, AMVR reduces the amount of data needed to encode the motion vector difference.
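The saving comes from writing the motion vector difference (MVD) in coarser units when fine precision is not needed. The sketch below stores an MVD in quarter-pixel units and picks the coarsest of the five resolutions that still represents it exactly. That selection rule is an assumption made for illustration; a real encoder would typically decide by rate-distortion cost.

```python
# Resolutions from the text, expressed as shifts relative to quarter-pel units.
AMVR_SHIFTS = {"1/4": 0, "1/2": 1, "1": 2, "2": 3, "4": 4}

def coarsest_exact_resolution(mvd_qpel):
    """Pick the coarsest resolution that represents the MVD without loss.

    mvd_qpel is an (x, y) difference in quarter-pel units. This rule is
    only illustrative and is not taken from the standard.
    """
    best = "1/4"
    for name, shift in AMVR_SHIFTS.items():
        step = 1 << shift
        if all(c % step == 0 for c in mvd_qpel):
            best = name
    return best

def coded_value(mvd_qpel, name):
    """Value written at the chosen resolution (smaller magnitude, fewer bits)."""
    shift = AMVR_SHIFTS[name]
    return tuple(c >> shift for c in mvd_qpel)

mvd = (32, -16)  # 8 and -4 full pixels, expressed in quarter-pel units
res = coarsest_exact_resolution(mvd)
print(res, coded_value(mvd, res))
```

Here the pair (32, -16) at quarter-pixel precision becomes (2, -1) at 4-pixel precision, so the entropy coder has smaller magnitudes to encode.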

HMVP

History-based motion vector prediction (HMVP) adds an HMVP candidate list of eight motion vectors alongside the spatial and temporal candidate lists. The motion vectors are arranged in first in, first out (FIFO) order. Before a new candidate is added, the system checks whether the same MVP already exists. Through this list, HMVP can supply motion information from a decoded block that is close, but not adjacent, to the current block, thereby increasing the efficiency of motion vector prediction.
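A minimal sketch of such a table is shown below. The class name is hypothetical, and two behaviours are assumptions rather than statements from this page: a duplicate is moved to the most recent position instead of being skipped, and candidates are read most recent first.

```python
from collections import deque

class HmvpTable:
    """History-based MV candidate table: eight entries, FIFO order."""

    def __init__(self, size=8):
        self.entries = deque(maxlen=size)

    def add(self, mv):
        # Duplicate check before insertion. Assumed handling: drop the old
        # copy so the motion vector moves to the most-recent position.
        if mv in self.entries:
            self.entries.remove(mv)
        self.entries.append(mv)  # deque(maxlen=...) evicts the oldest entry

    def candidates(self):
        # Most recent first (assumed search order).
        return list(reversed(self.entries))

table = HmvpTable()
for i in range(10):
    table.add((i, 0))  # hypothetical motion vectors of decoded blocks
table.add((5, 0))      # already present: moved, not duplicated
print(table.candidates())
```

After ten insertions the two oldest vectors have been evicted, and re-adding (5, 0) leaves the table at eight unique entries.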

UMVE

Ultimate motion vector expression (UMVE) fine-tunes the stride and direction of the base motion vector in skip mode or direct mode, which conserves bit rate and improves encoding quality. Using the position of the base motion vector (basemv) as the starting point, UMVE searches L0 and L1 in four directions and at five distances.
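The search space is small enough to enumerate. The sketch below lists the 4 x 5 = 20 refinements around one base motion vector. The concrete direction set (plus and minus along each axis) and the distance set (1/4, 1/2, 1, 2, and 4 pixels) are assumptions, since this page gives only their counts.

```python
from fractions import Fraction

DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]        # assumed: +x, -x, +y, -y
DISTANCES = [Fraction(1, 4), Fraction(1, 2), 1, 2, 4]  # assumed, in pixels

def umve_candidates(base_mv):
    """All refined vectors reachable from base_mv: 4 directions x 5 distances."""
    bx, by = base_mv
    return [(bx + dx * d, by + dy * d)
            for dx, dy in DIRECTIONS
            for d in DISTANCES]

cands = umve_candidates((Fraction(3), Fraction(-2)))  # hypothetical base MV
print(len(cands))
```

Signalling a refinement then takes only a direction index and a distance index instead of a full motion vector difference.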

AMC

Affine motion compensation (AMC) comes in four-parameter and six-parameter models, which encode the motion vectors or motion vector differences of two or three control points, respectively. The motion vectors of all other points are calculated from these control points. AMC efficiently represents complex motions such as scaling and rotation. The motion vector resolution is 1/16 pixel. For coding units (CUs) greater than 16 x 16, motion compensation is performed on 8 x 8 (unidirectional or bidirectional) or 4 x 4 (unidirectional) blocks. A fixed sub-block size facilitates hardware implementation.
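For the four-parameter model, the motion vector at any position follows from the two control-point vectors by the usual affine relation. The sketch below evaluates it at the centre of each 8 x 8 sub-block and rounds to 1/16 pixel. Evaluating at the sub-block centre, the function names, and the example vectors are assumptions made for illustration.

```python
def affine_mv_4param(v0, v1, width, x, y):
    """Motion vector at (x, y) from two control points.

    v0 is the MV at the top-left corner, v1 the MV at the top-right corner,
    and width is the CU width in pixels.
    """
    a = (v1[0] - v0[0]) / width  # combined scaling/rotation terms
    b = (v1[1] - v0[1]) / width
    return (a * x - b * y + v0[0],
            b * x + a * y + v0[1])

def subblock_mvs(v0, v1, width, height, sub=8):
    """One MV per sub x sub block, evaluated at the sub-block centre and
    rounded to 1/16-pixel precision."""
    mvs = {}
    for sy in range(0, height, sub):
        for sx in range(0, width, sub):
            mx, my = affine_mv_4param(v0, v1, width, sx + sub / 2, sy + sub / 2)
            mvs[(sx, sy)] = (round(mx * 16) / 16, round(my * 16) / 16)
    return mvs

# Hypothetical 32x32 CU whose control points describe a pure zoom.
mvs = subblock_mvs(v0=(0.0, 0.0), v1=(4.0, 0.0), width=32, height=32)
print(mvs[(0, 0)], mvs[(24, 24)])
```

Only the two control-point vectors are transmitted; the sixteen sub-block vectors are derived at the decoder.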

Transform: Improves the Encoding Efficiency of Residuals

Position-based transform (PBT) applies a DCT8 or DST7 transform according to the position of each sub-block of the residual within an inter-frame prediction block. Each sub-block uses a pre-designed transform set determined by its position, which fits the characteristics of inter-frame residuals more efficiently.
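The intuition can be seen from the lowest-frequency basis functions: the first DST-VII basis function rises across the block, while the first DCT-VIII basis function falls. The sketch below computes both using their standard definitions. The position-to-transform table is an assumption, based on the idea that inter-frame residuals tend to be larger near the outer boundary of a block; this page states only that the choice depends on position.

```python
import math

def dst7_basis(n, i):
    """i-th DST-VII basis function of length n."""
    s = math.sqrt(4 / (2 * n + 1))
    return [s * math.sin(math.pi * (2 * i + 1) * (j + 1) / (2 * n + 1))
            for j in range(n)]

def dct8_basis(n, i):
    """i-th DCT-VIII basis function of length n."""
    s = math.sqrt(4 / (2 * n + 1))
    return [s * math.cos(math.pi * (2 * i + 1) * (2 * j + 1) / (4 * n + 2))
            for j in range(n)]

# Assumed mapping: sub-block position -> (horizontal, vertical) transform.
PBT_SET = {
    "top-left": ("DCT8", "DCT8"),
    "top-right": ("DST7", "DCT8"),
    "bottom-left": ("DCT8", "DST7"),
    "bottom-right": ("DST7", "DST7"),
}

rising = dst7_basis(8, 0)   # small at the start, large at the end
falling = dct8_basis(8, 0)  # large at the start, small at the end
print([round(v, 3) for v in rising])
print([round(v, 3) for v in falling])
```

Under the assumed mapping, the falling DCT8 shape is used along a direction where the outer boundary is at the start of the sub-block, and the rising DST7 shape where it is at the end.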

AVS3 Benefits

50% higher compression efficiency

Ideal for UHD applications

One-stop patent pool solution

Halved bandwidth

50% less bandwidth when transmitting the same picture

Halved storage

50% less storage space when storing the same picture

Upgraded PQ

Better picture quality (PQ) under the same conditions

The AVS system delivers a 50% improvement in compression efficiency with each generation.

AVS3 HPM 4.0 vs AVS2 RD 19.5

- UHD: 24.3% improvement in performance under AVS3.

- 1080p/720p: Over 23% improvement in performance under AVS3.

- Same subjective quality: 23% reduction in bit rate under AVS3.

Source: AVS Workgroup

AVS3 HPM 10.1 vs HEVC HM 16.20

- 4K: About 35% improvement in performance under AVS3.

- 1080p: About 30% improvement in performance under AVS3.

- Same subjective quality: 40% reduction in bit rate under AVS3.
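As a quick illustration of what a bit-rate reduction means in practice, the sketch below applies the 40% figure from the comparison above to a hypothetical 100 Mbit/s HEVC stream and converts both rates into storage for one hour of video. The baseline rate and the duration are assumptions chosen only to make the arithmetic concrete.

```python
def after_saving(value, saving):
    """Apply a fractional bit-rate saving (0.40 means 40% fewer bits)."""
    return value * (1 - saving)

def storage_gb(bitrate_mbps, seconds):
    """Storage in gigabytes (10^9 bytes) for a constant-bit-rate stream."""
    return bitrate_mbps * 1e6 * seconds / 8 / 1e9

hevc_mbps = 100.0                          # hypothetical HEVC stream
avs3_mbps = after_saving(hevc_mbps, 0.40)  # same subjective quality, 40% fewer bits

print(f"bit rate: {hevc_mbps:.0f} -> {avs3_mbps:.0f} Mbit/s")
print(f"one hour: {storage_gb(hevc_mbps, 3600):.0f} -> "
      f"{storage_gb(avs3_mbps, 3600):.0f} GB")
```

Bandwidth and storage scale together because both are proportional to the bit rate.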

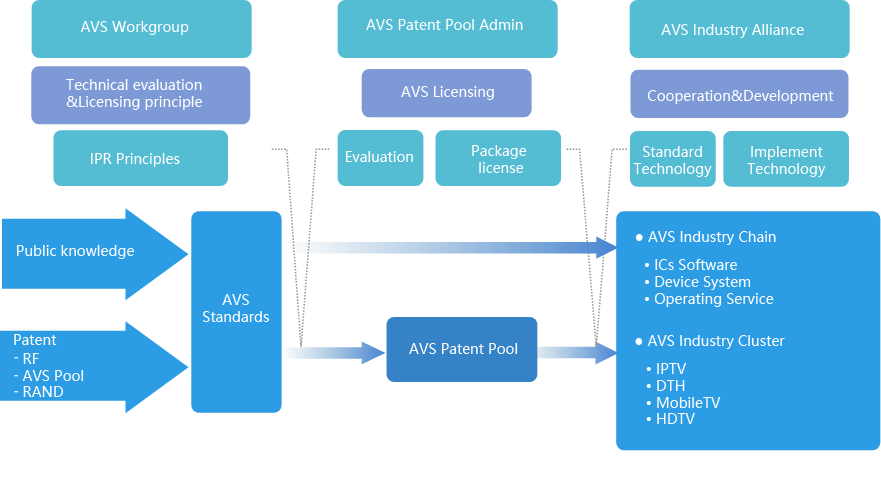

The AVS licensing model is set before the standard is formulated, which helps avoid, in advance, the complex and stringent patent licensing challenges that might otherwise arise during industrialization.

Clear patent licensing policies

- All units that submit technologies and proposals to the AVS Workgroup must commit to a patent licensing intent, with "free use" or "joining the AVS patent pool" permitted.

- The licensing mechanism is simple and efficient.

Low patent licensing fees

- Only devices are charged.

- Content is not charged.

- Carriers do not need to pay.

- No patent fee is charged for Internet software services.

Available and controllable patents

- Technologies from members are utilized, substantially mitigating patent-related risks.

- Duplicate declaration is avoided to ensure the uniqueness of the patent pool.

Industry Applications

Suzhou CSL E2E 8K Live Broadcast Trial

HiSilicon conducted the successful AVS3 8K live broadcast trial of the Chinese Football Association Super League (CSL) final in Suzhou Olympic Sports Centre, along with Jiangsu Broadcasting Cable Information Network Corporation Limited, National Radio and Television Administration UHDTV Research and Application Laboratory, China Sports Media, Arcvideo Tech, and BOE. It was the first-ever E2E 8K live broadcast trial of a major sporting event in China, and streamlined the process of AVS3-based 8K live broadcasting, encompassing capture, production, encoding, modulation, transmission, indoor reception, decoding, and playback.

CCTV 8K Channel Trial

The Spring Festival Gala has become a time-honored tradition in China, and the 2021 program, which marked the Year of the Ox, was aired via 8K ultra HD (UHD) live broadcasting technology. The broadcast coincided with the unveiling of an 8K UHD TV channel by China Media Group (CMG) and was supported by technology provided by HiSilicon (Shanghai), Peng Cheng Laboratory, and the Guangdong Bohua UHD Innovation Center, among other partners. As the industry's first 8K UHD video capture, production, transmission, and live TV broadcast for the public, the 2021 Spring Festival program represented a milestone in the history of the UHD video industry. 8K UHD video is the latest technological paradigm, following in the footsteps of digital and HD video. HiSilicon's technology has enabled CMG to establish an end-to-end 8K UHD pilot live broadcast system.

*Source: AVS M6296, March 2021.
